Why? Because the complexity of today's bioprocesses has long since exceeded what the human brain can manage.

In recent years, "AI+biomanufacturing" has become a hot topic.

But anyone who actually does this work knows that putting algorithms into fermentation tanks is not about telling a good story; it is a move forced by necessity. The old days, when veteran operators tuned parameters by "watching the foam and smelling the broth", are getting harder and harder to sustain.

I. Why is it necessary?

Four phrases sum it up: can't control, can't wait, can't afford, can't understand.

1. Can't control: cells are moodier than people

A 50-ton fermentation tank holds trillions of microorganisms, all metabolizing at the same time.

A 1 ℃ temperature deviation or a 5% swing in dissolved oxygen can push an entire batch off course. In the past, we relied on reading the gauges by hand every two hours and adjusting the feed based on experience.

The result: the same process could deliver a high yield one day and a scrapped batch the next.

Now, with online Raman and NIR sensors, we can "see" the concentrations of sugar, acid, and protein in the tank in real time. But data alone is useless; what matters is how it is used.

The value of AI lies in linking hundreds of variables together and reaching a judgment within milliseconds: "Over the next two hours, should we lower the temperature or add more carbon source?"
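As a minimal sketch of what "linking variables together" can mean, assume a surrogate model has already been fitted. It predicts the two-hour titer gain from the current state and a candidate action. The functional form, the variable names (`do_pct`, `delta_temp_c`, `extra_feed_g_l`) and every coefficient below are hypothetical placeholders, not values from any real process. The sketch shows that the same candidate action can rank differently depending on the state.

```python
# Hypothetical surrogate model: predicted titer gain (g/L) over the next 2 h.
# The functional form and every coefficient are illustrative placeholders;
# a real model would be fitted to plant data and take far more inputs.
def predict_titer_gain(state, action):
    # Extra carbon only pays off while dissolved oxygen (DO) has headroom
    do_headroom = (state["do_pct"] - 20.0) / 20.0
    feed_effect = 0.5 * action["extra_feed_g_l"] * do_headroom
    # Cooling lowers oxygen demand, so in this toy it is worth more when DO is low
    cool_effect = 0.3 * (-action["delta_temp_c"]) * (1.0 - do_headroom)
    return feed_effect + cool_effect

# Candidate actions for the next 2 h (hypothetical)
CANDIDATES = {
    "hold": {"delta_temp_c": 0.0, "extra_feed_g_l": 0.0},
    "cool_1C": {"delta_temp_c": -1.0, "extra_feed_g_l": 0.0},
    "add_carbon": {"delta_temp_c": 0.0, "extra_feed_g_l": 2.0},
}

def best_action(state):
    """Score every candidate against the current state and return the best."""
    scores = {name: predict_titer_gain(state, a) for name, a in CANDIDATES.items()}
    return max(scores, key=scores.get), scores

print(best_action({"do_pct": 60.0})[0])  # add_carbon
print(best_action({"do_pct": 15.0})[0])  # cool_1C
```

The point is the structure rather than the numbers. The decision depends on the combined state, so no single variable decides it on its own.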

This does not replace people; it helps them see what was previously invisible.

2. Can't wait: protein engineering can no longer rely on piling up time through trial and error

Previously, in enzyme engineering, screening 10,000 mutant strains might turn up only one or two useful ones. A single cycle often took two to three years, at a frighteningly high cost.

Some teams have now coupled AI prediction models with automated experimental platforms. The AI first computes the few dozen most promising mutation combinations. Robots then automatically synthesize, culture, and assay them, and the results are fed back into the model for the next iteration on the same day.

One team used this approach to raise the activity of a feed enzyme 26-fold and the selectivity of an industrial enzyme 90-fold. The search went from "looking for a needle in a haystack" to "precision guidance".

This is not showing off; it compresses the R&D cycle from years to weeks.
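The AI-plus-robot loop described above is often called a design-build-test-learn (DBTL) cycle. The toy sketch below assumes an additive model of mutation effects. A stand-in `assay` function replaces the robotic platform. The mutation names, effect sizes, batch size and the optimistic-exploration rule are all invented for illustration. This is not the method used by the team mentioned above.

```python
import itertools

def fit_additive(tested):
    """Learn: estimate each mutation's effect by splitting every tested
    variant's measured gain equally among its mutations, then averaging."""
    totals, counts = {}, {}
    for variant, gain in tested.items():
        for m in variant:
            totals[m] = totals.get(m, 0.0) + gain / len(variant)
            counts[m] = counts.get(m, 0) + 1
    return {m: totals[m] / counts[m] for m in totals}

def dbtl(mutations, assay, rounds=3, batch_size=4, max_order=2):
    # Design space: all single and double mutants (real libraries are far larger)
    space = [frozenset(c) for k in range(1, max_order + 1)
             for c in itertools.combinations(mutations, k)]
    tested = {}
    for _ in range(rounds):
        effects = fit_additive(tested)
        # Design: untested mutations get an optimistic score so they are explored
        optimistic = max(effects.values(), default=0.0) + 1.0
        pool = [v for v in space if v not in tested]
        pool.sort(key=lambda v: sum(effects.get(m, optimistic) for m in v), reverse=True)
        # Build + Test: `assay` stands in for robotic synthesis, culture and assay
        for v in pool[:batch_size]:
            tested[v] = assay(v)
    best = max(tested, key=tested.get)
    return best, tested[best], len(tested)

# Hypothetical hidden effects, used only to simulate the assay
TRUE_EFFECTS = {"A12V": 1.5, "L45F": -0.5, "S88T": 2.0, "G101D": 0.2}

def toy_assay(variant):
    return sum(TRUE_EFFECTS[m] for m in variant)

best, gain, n_tested = dbtl(list(TRUE_EFFECTS), toy_assay, rounds=2)
print(sorted(best), gain, n_tested)  # ['A12V', 'S88T'] 3.5 8
```

Each round retrains on everything measured so far, which is what turns blind screening into guided search. A production system would replace the additive attribution with a proper learned model.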

The core goal of pilot-scale testing is not only to prove out the process route. It must also supply engineering data and boundary conditions for scale-up. The earlier the limitations of the pilot equipment are taken into account, the smoother the path ahead.

3. Can't afford: every kilowatt-hour of electricity and every gram of raw material saved is profit

One pharmaceutical company calculated that after it deployed an AI control system, its overall costs fell by 10%. Most of the savings came from three areas:

Lower energy consumption (e.g., intelligently adjusting agitation speed and aeration rate);

Better raw-material utilization (feeding is no longer a matter of "better too much than too little");

Fewer failed batches (early warning of contamination and metabolic abnormalities).
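One simple, widely used way to get early warning is to compare a running batch against the envelope of historical good batches (a "golden batch" band). The sketch assumes aligned sampling times and a ±3σ band. The readings are invented oxygen-uptake-rate values. Real systems often use multivariate statistical process monitoring (e.g., PCA- or PLS-based) instead of one variable at a time.

```python
from statistics import mean, stdev

def golden_batch_envelope(good_batches, k=3.0):
    """Per-time-point band mean ± k*std across historical good batches.
    Assumes every batch was sampled at the same, aligned time points."""
    envelope = []
    for values in zip(*good_batches):
        mu, sd = mean(values), stdev(values)
        envelope.append((mu - k * sd, mu + k * sd))
    return envelope

def first_alarm(current, envelope):
    """Index of the first reading outside the band, or None if all are inside."""
    for t, (x, (lo, hi)) in enumerate(zip(current, envelope)):
        if not lo <= x <= hi:
            return t
    return None

# Hypothetical oxygen-uptake-rate trajectories (one reading per sampling interval)
good = [[10, 20, 30, 40], [11, 21, 29, 41], [9, 19, 31, 39]]
band = golden_batch_envelope(good)
print(first_alarm([10, 21, 24, 30], band))  # 2
print(first_alarm([10, 20, 30, 40], band))  # None
```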

The gains are even larger in continuous processing. In perfusion culture, for example, AI can dynamically adjust column loading based on real-time product concentration. This cut buffer consumption by 30% and raised overall line efficiency by 20%.
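At its core, the column-loading adjustment is simple arithmetic. When the product titer in the harvest changes, the volume loaded per cycle should change too, so that each cycle fills the same fraction of the resin's binding capacity. The sketch assumes a fixed dynamic binding capacity (DBC) and a hypothetical 80% target utilization. The column size and titers are illustrative, not figures from the case above.

```python
def load_volume_l(titer_g_per_l, column_volume_l, dbc_g_per_l_resin, target_utilization=0.8):
    """Harvest volume (L) to load per capture cycle so the column is filled to
    `target_utilization` of its dynamic binding capacity (DBC)."""
    if titer_g_per_l <= 0:
        raise ValueError("titer must be positive")
    capacity_g = dbc_g_per_l_resin * column_volume_l * target_utilization
    return capacity_g / titer_g_per_l

# Hypothetical 1 L column with a DBC of 40 g per L of resin
for titer in (2.0, 2.5, 4.0):  # real-time titer readings, g/L
    print(titer, round(load_volume_l(titer, 1.0, 40.0), 2))
```

Suppose a fixed volume is loaded regardless of titer. If the titer rises, the load can exceed the column's capacity and product breaks through. If the titer falls, the column is under-used, so every equilibration, wash and elution spends buffer on less product. Recomputing the load each cycle avoids both.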

For bulk fermentation products, whose gross margins are already thin, this is a lifeline.

4. Can't understand: from "breeding by experience" to "designing life"

Breeding a new rice variety used to take 8-10 years of generation-by-generation selection in the field.

Now, high-throughput phenotyping data and genomic AI models can predict which hybrid combinations are most likely to be high-yielding and disease-resistant. Breeders can then skip intermediate generations and produce new varieties within 3-5 years.
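Genomic prediction is commonly built on ridge-regression-type models of marker effects (rrBLUP/GBLUP-style). The article does not say which model was used. The sketch below fits marker effects with ridge regression by coordinate descent. It then scores every possible cross by the average of the two parents' allele dosages. Several assumptions are loud ones: purely additive effects (no dominance or heterosis), three markers, tiny invented data, and hypothetical parent names.

```python
import itertools

def fit_ridge(X, y, lam=1.0, sweeps=100):
    """Ridge regression of phenotype on marker dosages (0/1/2) by coordinate
    descent. Returns (intercept, marker means, marker effects)."""
    n, p = len(X), len(X[0])
    x_mean = [sum(row[j] for row in X) / n for j in range(p)]
    Xc = [[row[j] - x_mean[j] for j in range(p)] for row in X]  # centered markers
    y_mean = sum(y) / n
    resid = [yi - y_mean for yi in y]
    b = [0.0] * p
    for _ in range(sweeps):
        for j in range(p):
            col = [row[j] for row in Xc]
            # Correlate marker j with the residual that has its own effect added back
            rho = sum(c * (r + c * b[j]) for c, r in zip(col, resid))
            new_bj = rho / (sum(c * c for c in col) + lam)
            resid = [r - c * (new_bj - b[j]) for c, r in zip(col, resid)]
            b[j] = new_bj
    return y_mean, x_mean, b

def predict(model, genotype):
    y_mean, x_mean, b = model
    return y_mean + sum(bj * (g - m) for bj, g, m in zip(b, genotype, x_mean))

def rank_crosses(model, parents):
    """Score every pairwise cross by its expected additive genotype
    (average of the two parents' dosages); ignores dominance and heterosis."""
    scores = {}
    for (a, ga), (c, gc) in itertools.combinations(parents.items(), 2):
        hybrid = [(u + v) / 2 for u, v in zip(ga, gc)]
        scores[(a, c)] = predict(model, hybrid)
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

# Hypothetical training lines (3 markers) and phenotypes
X = [[0, 0, 0], [2, 0, 0], [0, 2, 0], [0, 0, 2], [2, 2, 2], [2, 0, 2]]
y = [5.0, 7.0, 5.0, 4.0, 6.0, 6.0]
model = fit_ridge(X, y)
parents = {"P1": [2, 0, 0], "P2": [0, 0, 2], "P3": [2, 2, 0], "P4": [0, 2, 2]}
print(rank_crosses(model, parents)[0][0])  # ('P1', 'P3')
```

Only the crosses that rank highest need to be grown out in the field. That is where the skipped generations come from.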

II. How to do it

Don't build castles in the air: integrate software and hardware, and close the loop.

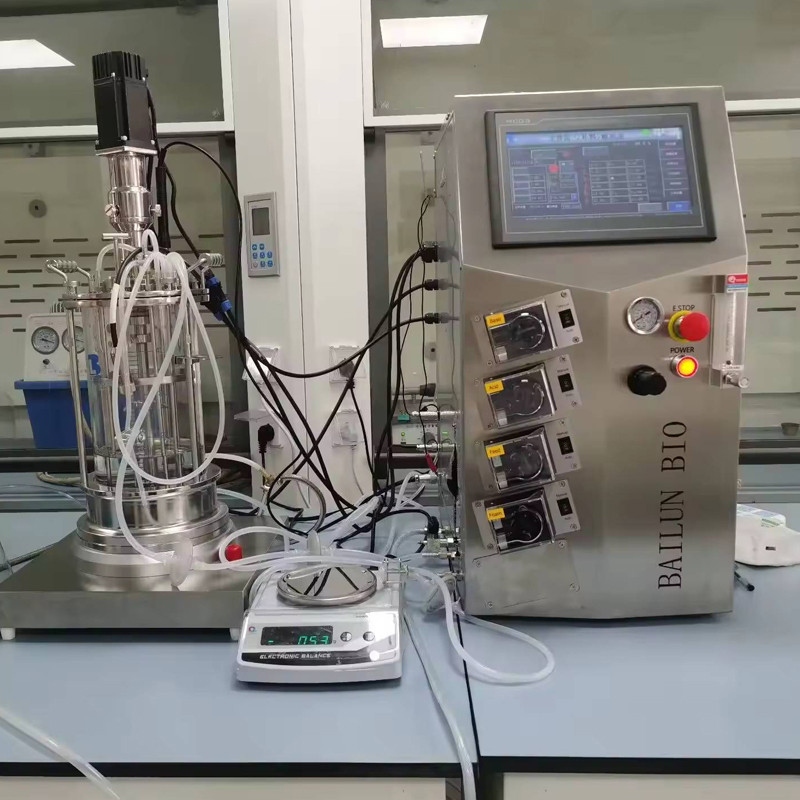

1. First, build the "nerve endings": make the equipment "speak"

Without high-quality data, AI is like a cook with no rice. The key is not how many sensors you install but whether the right sensors are in the right places.

In the fermenter, online metabolite monitoring is needed in addition to the usual pH, DO, and temperature probes (e.g., Raman spectroscopy for glucose and lactate);

On feed lines, add flow meters and turbidity meters to catch blockages or drift in feed ratios;

The status of key valves and pumps must also be fed back to the system. Otherwise, if a control command is not executed properly, nobody will know, and the control is useless.

There is only one goal: every action in the physical world should be mirrored in real time in the digital world.
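The two checks in the list above can be sketched in a few lines. The first compares each valve's or pump's read-back with the command it was sent. The second flags drift in the ratio between two metered feed lines. The `Actuator` type, device names, tolerances and target ratio are hypothetical. A real plant would read these values from its control system.

```python
from dataclasses import dataclass

@dataclass
class Actuator:
    name: str
    commanded: float  # setpoint sent by the control system (e.g. % open, rpm)
    measured: float   # position or speed read back from the device
    tolerance: float  # largest mismatch still treated as "executed"

def unexecuted_commands(actuators):
    """Names of actuators whose read-back does not match the command."""
    return [a.name for a in actuators if abs(a.commanded - a.measured) > a.tolerance]

def feed_ratio_drift(flow_a, flow_b, target_ratio, rel_tol=0.05):
    """True if the ratio of two measured feed flows drifts beyond rel_tol,
    or if line B shows no flow at all (possible blockage)."""
    if flow_b <= 0:
        return True
    return abs(flow_a / flow_b - target_ratio) > rel_tol * target_ratio

# Hypothetical plant snapshot
acts = [Actuator("feed_pump", 12.0, 12.1, 0.5), Actuator("air_valve", 60.0, 35.0, 2.0)]
print(unexecuted_commands(acts))         # ['air_valve']
print(feed_ratio_drift(11.0, 5.0, 2.0))  # True
```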

2. Next, build the "decision-making center": AI must not be a black box; it has to be explainable and open to intervention

Many plants hesitate to use AI because they fear "not knowing why it made that adjustment".

The solution is to integrate mechanistic models with data-driven approaches.

For example, microbial growth is commonly described by the Monod equation, and substrate consumption is bound by kinetic and yield constraints.
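For reference, the Monod model and the batch mass balances are usually written as follows. Here μ is the specific growth rate, μ_max its maximum, S the limiting-substrate concentration, K_s the half-saturation constant, X the biomass concentration, and Y_{X/S} the biomass yield on substrate. This is the textbook batch form, without feed or maintenance terms.

```latex
\mu(S) = \mu_{\max}\,\frac{S}{K_s + S}, \qquad
\frac{dX}{dt} = \mu(S)\,X, \qquad
\frac{dS}{dt} = -\frac{1}{Y_{X/S}}\,\mu(S)\,X
```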

Embedding these physical laws into the neural network as "prior knowledge" keeps the model from making suggestions that defy common sense, such as "keep feeding sugar rapidly while dissolved oxygen drops to 0".
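The article does not say how the embedding is done. One common way is a physics-informed loss. The network is trained to fit the measurements and is also penalized whenever its predicted trajectories violate the mass balances. The sketch below assumes the batch Monod balances with no feed and uses forward differences on the predicted trajectories. It leaves the network itself out. The `weight` value and all kinetic constants are hypothetical.

```python
def monod_rates(X, S, mu_max, Ks, Yxs):
    """Batch Monod balances (no feed): returns (dX/dt, dS/dt)."""
    s = max(S, 0.0)  # guard: rates are only defined for non-negative substrate
    mu = mu_max * s / (Ks + s)
    return mu * X, -mu * X / Yxs

def physics_informed_loss(t, X_pred, S_pred, X_obs, S_obs, params, weight=1.0):
    """Data misfit plus `weight` times a penalty for violating the Monod balances.
    X_pred and S_pred stand in for a neural network's predicted trajectories."""
    data = sum((p - o) ** 2 for p, o in zip(X_pred + S_pred, X_obs + S_obs))
    physics = 0.0
    for i in range(len(t) - 1):
        dt = t[i + 1] - t[i]
        # Forward-difference slopes of the predicted trajectories
        dX = (X_pred[i + 1] - X_pred[i]) / dt
        dS = (S_pred[i + 1] - S_pred[i]) / dt
        rX, rS = monod_rates(X_pred[i], S_pred[i], **params)
        physics += (dX - rX) ** 2 + (dS - rS) ** 2
    # Concentrations cannot go negative
    physics += sum(min(0.0, s) ** 2 for s in S_pred)
    return data + weight * physics

params = {"mu_max": 0.4, "Ks": 0.5, "Yxs": 0.5}  # hypothetical kinetic constants
t = [0.0, 0.5, 1.0, 1.5]
X, S = [1.0], [10.0]
for _ in range(3):  # an Euler-integrated, physically consistent trajectory
    dX, dS = monod_rates(X[-1], S[-1], **params)
    X.append(X[-1] + 0.5 * dX)
    S.append(S[-1] + 0.5 * dS)
consistent = physics_informed_loss(t, X, S, X, S, params)
rising_sugar = [10.0, 10.5, 11.0, 11.5]  # sugar rising with no feed: non-physical
violating = physics_informed_loss(t, X, rising_sugar, X, rising_sugar, params)
print(consistent < 1e-12, violating > 1.0)  # True True
```

Both trajectories match their "observations" exactly, so the data term is zero in each case. The physics term alone separates the plausible trajectory from the impossible one.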

Suppose operators see a prompt such as "Suggest reducing the feed rate: the current OUR (oxygen uptake rate) is close to the critical value". They can immediately follow the logic and feel confident acting on it.
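Such a prompt is trustworthy partly because its reason comes from a physical quantity operators already know. The sketch below is a minimal example. It assumes the oxygen-transfer limit is estimated as kLa * (C* - C_crit), the most oxygen the vessel can deliver while keeping DO above a critical level. It also uses a hypothetical 90% margin. In practice OUR is usually computed from off-gas analysis, and kLa must be measured for the specific vessel and operating conditions. The example values are illustrative only.

```python
def feed_advice(our, kla, c_sat, c_crit, margin=0.9):
    """Return (action, explanation).
    our: oxygen uptake rate, mmol O2/(L*h); kla: volumetric mass-transfer
    coefficient, 1/h; c_sat, c_crit: saturation and critical dissolved-oxygen
    concentrations, mmol/L."""
    # Most oxygen the vessel can transfer while keeping DO above c_crit
    otr_limit = kla * (c_sat - c_crit)
    ratio = our / otr_limit
    if ratio >= margin:
        return ("reduce_feed",
                f"Suggest reducing the feed rate: OUR ({our:.1f}) is at {ratio:.0%} "
                f"of the oxygen-transfer limit ({otr_limit:.1f} mmol/L/h).")
    return ("hold", f"OUR is at {ratio:.0%} of the oxygen-transfer limit; no change needed.")

# Hypothetical values: kLa = 200 1/h, C* = 0.25 mmol/L, critical DO = 0.05 mmol/L
action, why = feed_advice(38.0, 200.0, 0.25, 0.05)
print(action)  # reduce_feed
print(why)
```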

3. Dynamic tuning, not static control: time is the most critical variable

Fermentation is not a steady-state process.

The optimal strategy at hour 20 is very different from the optimal strategy at hour 100.

A good AI system must be able to forecast the time series across the entire batch. It should not just read the present but project how the process will evolve over the next several tens of hours.

For example, lowering the temperature slightly now slows short-term growth. But it can prevent dissolved oxygen from collapsing later, so the final total yield is higher. Only a model with a time dimension can work out this kind of "trade the present for the whole" strategy.
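A toy illustration of why the horizon matters: two hypothetical strategies are each scored by simulating the rest of the batch, rather than only the next step. The growth rates, oxygen capacity and "production stops after DO collapse" rule are invented for illustration. A real system would use a fitted process model.

```python
def simulate(growth_rate, hours, x0=1.0, o2_capacity=4.0, q_p=1.0):
    """Toy batch model (all numbers hypothetical). Biomass grows by
    `growth_rate` per hour and product accrues at q_p * biomass per hour,
    until oxygen demand (taken as proportional to biomass) exceeds the
    vessel's transfer capacity. Then DO collapses and, in this toy,
    production stops for the rest of the batch."""
    x, product = x0, 0.0
    for _ in range(hours):
        if x > o2_capacity:  # DO collapse
            break
        product += q_p * x
        x *= 1.0 + growth_rate
    return product

def best_strategy(strategies, horizon_h):
    """Pick the strategy with the highest total product over the horizon."""
    return max(strategies, key=lambda name: simulate(strategies[name], horizon_h))

# Hypothetical: a slight temperature drop slows growth from 10 %/h to 6 %/h
strategies = {"keep_temperature": 0.10, "cool_slightly": 0.06}
print(best_strategy(strategies, 10))  # keep_temperature
print(best_strategy(strategies, 30))  # cool_slightly
```

A 10-hour view prefers the faster strategy. The 30-hour view prefers cooling, because the faster culture hits its oxygen limit first.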

It must also correct itself in real time. As soon as the sensors detect that the actual metabolic rate deviates from the prediction, the model re-plans immediately. This forms a "prediction → execution → feedback → re-optimization" flywheel.
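The flywheel can be sketched in miniature. The model holds a predicted specific growth rate and plans the next interval's feed from it. When a measurement deviates by more than a threshold, it moves the model part-way toward the observation before re-planning. The exponential-smoothing update, the 20% threshold, the yield value and the feed rule (substrate needed for growth, ignoring maintenance) are all simplifying assumptions.

```python
def plan_feed(mu, biomass_g, yield_xs=0.5):
    """Substrate feed (g/h) needed to sustain specific growth rate mu (1/h);
    ignores maintenance. yield_xs is a hypothetical biomass yield (g/g)."""
    return mu * biomass_g / yield_xs

def closed_loop(observations, mu_model=0.10, rel_threshold=0.2, alpha=0.5):
    """observations: (biomass_g, measured_mu) for each control interval.
    Returns (planned feed for the next interval, whether the model was updated)."""
    log = []
    for biomass, mu_obs in observations:
        # Feedback: does the measured rate deviate from the prediction?
        deviated = abs(mu_obs - mu_model) > rel_threshold * mu_model
        if deviated:
            # Re-optimization: move the model part-way toward the observation
            mu_model = (1 - alpha) * mu_model + alpha * mu_obs
        # Prediction + execution: plan the next interval's feed from the model
        log.append((plan_feed(mu_model, biomass), deviated))
    return log

# Hypothetical readings: the culture slows down after the first interval
for feed, updated in closed_loop([(10.0, 0.10), (11.0, 0.06), (11.5, 0.06)]):
    print(round(feed, 2), updated)
```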

4. It runs even on small samples: don't wait for "perfect data"

Many people get stuck at step one: "We have too little historical data to train an AI." Not necessarily.

Transfer learning helps here. For example, pre-train a model on Escherichia coli data and fine-tune it with a small amount of yeast data. Alternatively, train jointly on simulated data combined with a small amount of measured data.
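The pre-train/fine-tune idea can be shown with the smallest possible model, a one-feature linear regression. It is pre-trained in closed form on a large "source" set and then fine-tuned with a few gradient steps on three "target" points. The same gradient budget is also applied starting from zero weights for comparison. The data, the feature, the learning rate and the step count are all hypothetical. Real transfer learning would use richer models, but the mechanism is the same: start from what a related organism taught you.

```python
def mse(w, b, xs, ys):
    """Mean squared error of the linear model y = w*x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def fit_least_squares(xs, ys):
    """Pre-training: closed-form simple linear regression on the large source set."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    w = sxy / sxx
    return w, my - w * mx

def fine_tune(w, b, xs, ys, lr=0.01, steps=50):
    """Fine-tuning: a few gradient-descent steps on the small target set,
    starting from whatever weights are passed in."""
    n = len(xs)
    for _ in range(steps):
        errs = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 / n * sum(e * x for e, x in zip(errs, xs))
        grad_b = 2 / n * sum(errs)
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

# Hypothetical data: x is a single process feature, y a response.
# Large source set ("E. coli") and only three target points ("yeast").
src_x = [float(x) for x in range(10)]
src_y = [2.0 * x + 1.0 for x in src_x]
tgt_x = [1.0, 4.0, 7.0]
tgt_y = [2.2 * x + 1.5 for x in tgt_x]

w0, b0 = fit_least_squares(src_x, src_y)     # pre-train
tuned = fine_tune(w0, b0, tgt_x, tgt_y)      # transfer
scratch = fine_tune(0.0, 0.0, tgt_x, tgt_y)  # same budget, no pre-training
print(mse(*tuned, tgt_x, tgt_y) < mse(*scratch, tgt_x, tgt_y))  # True
```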

One team achieved rapid adaptation to new strains using only 5% of the data volume traditionally required.

The key is to get it running first and then iterate. If we wait until the data is complete, we will never start.

Ultimately, "big data + AI + bioprocesses" is not about showing off. It is about turning uncertainty into certainty that can be computed, controlled, and optimized.

The truly successful cases did not come from buying an AI box and plugging it in. They came from deeply integrating process technology, equipment, data, and algorithms.

Whoever can get these four gears to mesh will seize the opportunity in the next round of biomanufacturing competition.

After all, in an era when even yeast is starting to "take orders from algorithms", experience is still valuable, but experience alone is no longer enough.